Report on Data Security: Failure at Facebook

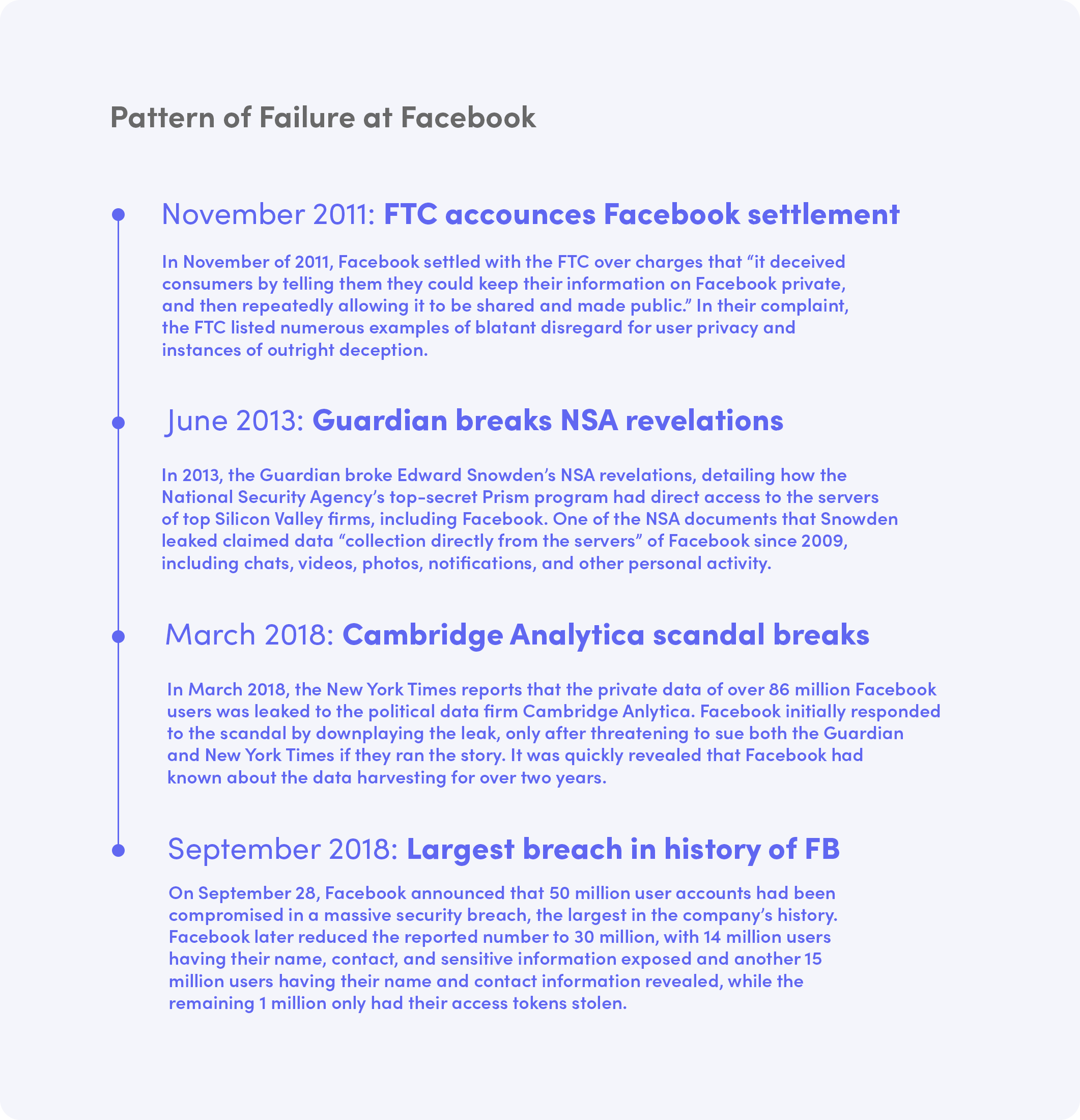

On September 28, Facebook announced that 50 million user accounts had been compromised in a massive security breach, the largest in the company’s history. Facebook later reduced the reported number to 30 million, with 14 million users having their name, contact, and sensitive information exposed and another 15 million users having their name and contact information revealed, while the remaining 1 million only had their access tokens stolen.

For 14 million that meant name, phone number, email, username, gender, locale, relationship status, religion, hometown, current city, birthdate, device types, education, work, website, people and pages they follow, 15 most recent searches, and the last 10 places they checked into or were tagged in on Facebook.

Not only were accounts compromised, but hackers were potentially able to gain access to any third-party app, service, or website that those users had logged into using Facebook. The hack thus gave the attackers potential access to additional accounts on tens of thousands of other sites, including Instagram, Tinder, Spotify, and Airbnb, making the breach not only the company’s largest to date but also its most severe.

For the full report, please read below.

Table of Contents

- The Announcement

- Discovering Vulnerabilities

- The Launch of Facebook Connect

- Single Point of Failure

- Misaligned Incentives

- The Cost of Connectivity

- The Cambridge Analytica Scandal

- A Shifting Dialogue on Data Security & Privacy

- A Pattern of Failure

- An Uncertain Future

The Announcement

When the announcement was made, the full scope of the breach was still unknown. Facebook did not yet know who the attackers were, how they had taken advantage of their access, or the full breadth of the attack. Third parties scrambled to get details from Facebook, unable to provide their own users with much information at all.

A few days later a spokesperson for Facebook told Wired that “we’re still doing the investigation to see if these attackers did get access to those third-party apps.”

Discovering Vulnerabilities

Soon, more information emerged about how the hack occurred. The hackers exploited three security vulnerabilities introduced in July 2017: two in the “View As” feature and a third in a video-uploading feature for birthdays. Together, these allowed the attackers to siphon off access tokens for 30 million accounts over the course of more than a year. Access tokens are keys that provide access to the platform and linked apps. By acquiring user access tokens, attackers could log in to the Facebook accounts and access any third-party account for which those users relied on Facebook Login. Facebook Login, the company’s single sign-on service, allows Facebook users to log in to other sites, platforms, and applications around the internet using their Facebook credentials instead of having to create new accounts for each one.
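To make the risk concrete, the sketch below models an access token as a bearer credential: the service checks only that the token is valid, not who is presenting it. It is a toy illustration rather than Facebook’s implementation, and the `TokenStore` class and its method names are hypothetical.

```python
import secrets


class TokenStore:
    """Toy in-memory token service (hypothetical; not Facebook's actual design)."""

    def __init__(self):
        self._tokens = {}  # token string -> user id

    def issue(self, user_id):
        # Issue a random, unguessable token once the user has logged in.
        token = secrets.token_urlsafe(32)
        self._tokens[token] = user_id
        return token

    def whoami(self, token):
        # Bearer semantics: whoever presents a valid token is treated as the user.
        return self._tokens.get(token)

    def reset(self, user_id):
        # Invalidate every token issued to this user, forcing a fresh login.
        self._tokens = {t: u for t, u in self._tokens.items() if u != user_id}


store = TokenStore()
alice_token = store.issue("alice")

# An attacker who copies the token needs no password to act as Alice.
stolen_token = alice_token
print(store.whoami(stolen_token))  # alice

# Resetting Alice's tokens locks the attacker out (and logs Alice out too).
store.reset("alice")
print(store.whoami(stolen_token))  # None
```

In this model the password never enters the picture after login, which is why stolen tokens are as valuable to an attacker as the credentials themselves.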

Media response to the breach was swift, with the New York Times running an article with the headline “Why You Shouldn’t Use Facebook to Log In to Other Sites.” Wired called it a “historic data breach” and an “internet-wide failure.” Concerns about the security of, and vulnerabilities in, Facebook’s single sign-on service had been mounting for months. In April, Wired wrote of the security risks of Facebook Login and its rapid consolidation of the single sign-on market, saying that “Facebook Profiles have become the de-facto identities of people across the internet.” What happens when the de facto identity provider for billions of users worldwide is compromised?

The Launch of Facebook Connect

Single sign-on (SSO) allows users to log in to multiple services with the same credentials. One of its major benefits is that it obviates the need to remember usernames and passwords for dozens of additional platforms, reducing password fatigue and streamlining the sign-up and login process. For the many users who lack the technical know-how or time to create unique and secure passwords for every online account, relying on a single identity provider is often far more convenient, and in some ways even more secure.

Many sites also lack the resources to implement stringent security mechanisms on their own platforms, so outsourcing that work to Facebook is a convenience for them as well. These advantages, for both users and providers, have led to the precipitous rise of SSO. Originally introduced as “Facebook Connect” in July 2008 at the company’s F8 developer conference, Facebook Login quickly came to dominate and consolidate the single sign-on provider market. Facebook credentials are used to authenticate users on other platforms, and Facebook provides a set of authentication APIs that developers can integrate into their own services.

Single Point of Failure

When Facebook Connect was released, the New York Times quite presciently wrote, “Facebook, the Internet’s largest social network, wants to let you take your friends with you as you travel the Web. But having been burned by privacy concerns in the last year, it plans to keep close tabs on those outings.” For all its convenience, single sign-on also presents a highly visible and rewarding single point of attack, and with Facebook being such a large platform, built on breathtaking amounts of code, it is nearly impossible to prevent, find, and address every single security vulnerability.

Facebook held the keys to thousands of platforms and services, all of which were compromised by one single hack that exploited three critical vulnerabilities, elevating what was already an alarming attack into one that has wide-ranging consequences reaching into nearly every corner of the online world.

In August of 2018, researchers from the University of Illinois, in a report titled “O Single Sign-Off, Where Art Thou?”, addressed this very concern.

In their paper, the researchers highlighted not only the security pitfalls of current incarnations of single sign-on, and Facebook in particular, but also how hard it is to fully revoke access to third-party services once an account has been hijacked, showing that “the vast majority of RPs [relying parties] lack functionality for victims to terminate active sessions and recover from such an attack.” As such, they concluded that, “Due to the proliferation of SSO, user accounts in identity providers are now keys to the kingdom and pose a massive security risk. If such an account is compromised, attackers gain control of the user’s accounts in numerous other web services.”
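The recovery problem the researchers describe can be illustrated with a toy model. In the sketch below, a relying party checks the identity provider’s token only once, at login, and then issues its own session; revoking the token later does nothing to that session unless the relying party offers a way to end it. All class and method names are hypothetical, and real deployments differ in detail.

```python
import secrets


class IdentityProvider:
    """Toy identity provider that issues and revokes tokens (hypothetical)."""

    def __init__(self):
        self._tokens = {}  # token -> user id

    def issue(self, user_id):
        token = secrets.token_urlsafe(16)
        self._tokens[token] = user_id
        return token

    def validate(self, token):
        return self._tokens.get(token)

    def revoke_all(self, user_id):
        self._tokens = {t: u for t, u in self._tokens.items() if u != user_id}


class RelyingParty:
    """Toy third-party site that accepts logins from the identity provider."""

    def __init__(self, idp):
        self._idp = idp
        self._sessions = {}  # session id -> user id

    def login(self, token):
        # The provider's token is checked once, at login time only.
        user_id = self._idp.validate(token)
        if user_id is None:
            return None
        session_id = secrets.token_urlsafe(16)
        self._sessions[session_id] = user_id
        return session_id

    def is_logged_in(self, session_id):
        # Later requests rely on the local session, not on the provider.
        return session_id in self._sessions

    def terminate_sessions(self, user_id):
        # The "single sign-off" step that many relying parties lack.
        self._sessions = {s: u for s, u in self._sessions.items() if u != user_id}


idp = IdentityProvider()
site = RelyingParty(idp)

stolen = idp.issue("alice")
attacker_session = site.login(stolen)

idp.revoke_all("alice")
print(site.is_logged_in(attacker_session))  # True: the session outlives the token
print(site.login(stolen))                   # None: the revoked token is now useless

site.terminate_sessions("alice")
print(site.is_logged_in(attacker_session))  # False
```

The point of the model is that revocation at the identity provider and termination at each relying party are separate steps, and a victim can only complete the second where a site exposes it.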

There is a wide range of other security issues that come with having a single custodian of your login identity, one of which is that it can increase the severity of phishing attacks. If a user exposes their credentials as a result of phishing, the attacker gains access to all connected apps as well, ratcheting up the damage. In research published by the IEEE Computer Society, researchers concluded that “the overall security quality of SSO deployments seems worrisome.” Beyond these general concerns over the security of SSO services, Facebook Login itself has been the target of numerous exploits and attacks.

In April of 2018, researchers at Princeton’s Center for Information Technology Policy released a paper detailing the ways that data was being scraped and linked to personal identifiers using Facebook’s Login API. The researchers found that third-party tracking scripts were collecting and siphoning off personal information through Facebook’s Login API without users’ knowledge. In total, seven third parties had trackers embedded on sites that use Facebook Login, which then pulled usernames, email addresses, birthdays, and other private information when users granted the websites access to their Facebook profiles.

The scripts were then able to link unique IDs, which are specific to each application, with Facebook profiles, allowing the sites to track individual users across additional websites, in effect deanonymizing them. As a result, users were sharing their information not only with the website, application, or platform that they had allowed to access their Facebook profile, but also with unknown third parties who intercepted the information and then used it to further track their online behavior. These scripts were found on over 400 websites.

As for September’s security breach, it comes as Facebook is facing increased scrutiny by lawmakers, regulators, and privacy advocates over its collection, use, and protection, or lack thereof, of user data. With over 2.2 billion users worldwide, a user base without historical rival, Facebook presents a single point of attack of immeasurable reward, and any security vulnerabilities or unscrupulous data practices have worldwide ramifications.

Never before has a single company been in possession of, and responsible for safeguarding, such a colossal amount of personal information. As such, a compromise on a single platform such as Facebook has wide-reaching and damning ramifications for hundreds of millions, if not billions, of users on tens of thousands of platforms and applications. A single security failure then ripples out, potentially compromising and exposing vast numbers of accounts and data on billions of individuals, a result of an intimately interconnected online landscape.

Misaligned Incentives

As Facebook grew and looked for ways to monetize the platform, extremely permissive access to, and use of, user data became the status quo, helping the company generate billions in revenue and providing a viable business model. As its user base, business pages, and linked applications grew precipitously, Facebook was forced to always be playing catch-up, announcing more evolved privacy controls, practices, and policies seemingly only in the wake of controversy and retroactively addressing privacy concerns once they had reached a tipping point, even as its porous data policies drove developers to the platform in prodigious numbers.

It wasn’t until after Facebook began sharing data with third-party developers that a team was put in place to check and monitor for misuse of data. Even then, when an operations manager at Facebook, Sandy Parakilas, suggested in 2011 that Facebook conduct an internal audit of the use of data by third parties after discovering a startling amount of data collection that users were unaware of, he was quickly shut down. While behind the scenes Facebook has been hesitant to curb data collection, it has continued to make public pronouncements in the name of user privacy and security, which the company has rarely lived up to.

In April of 2014, Facebook announced Anonymous Login at its F8 developer conference, which was championed as a “brand new way to log into apps without sharing any personal information from Facebook.” Zuckerberg, in his keynote address, said, “We know that some people are scared of pressing this blue button. We don’t ever want anyone to be surprised about how they’re sharing on Facebook, that’s not good for anyone.” Despite the showcase announcement and prime spot it was given at the conference, four years later the feature has yet to materialize. Because the feature was targeted at developers, interest was lacking from the beginning: there were few incentives to foster its adoption, and most developers had no interest in voluntarily turning off the data spigot they had been reaping vast rewards from for years.

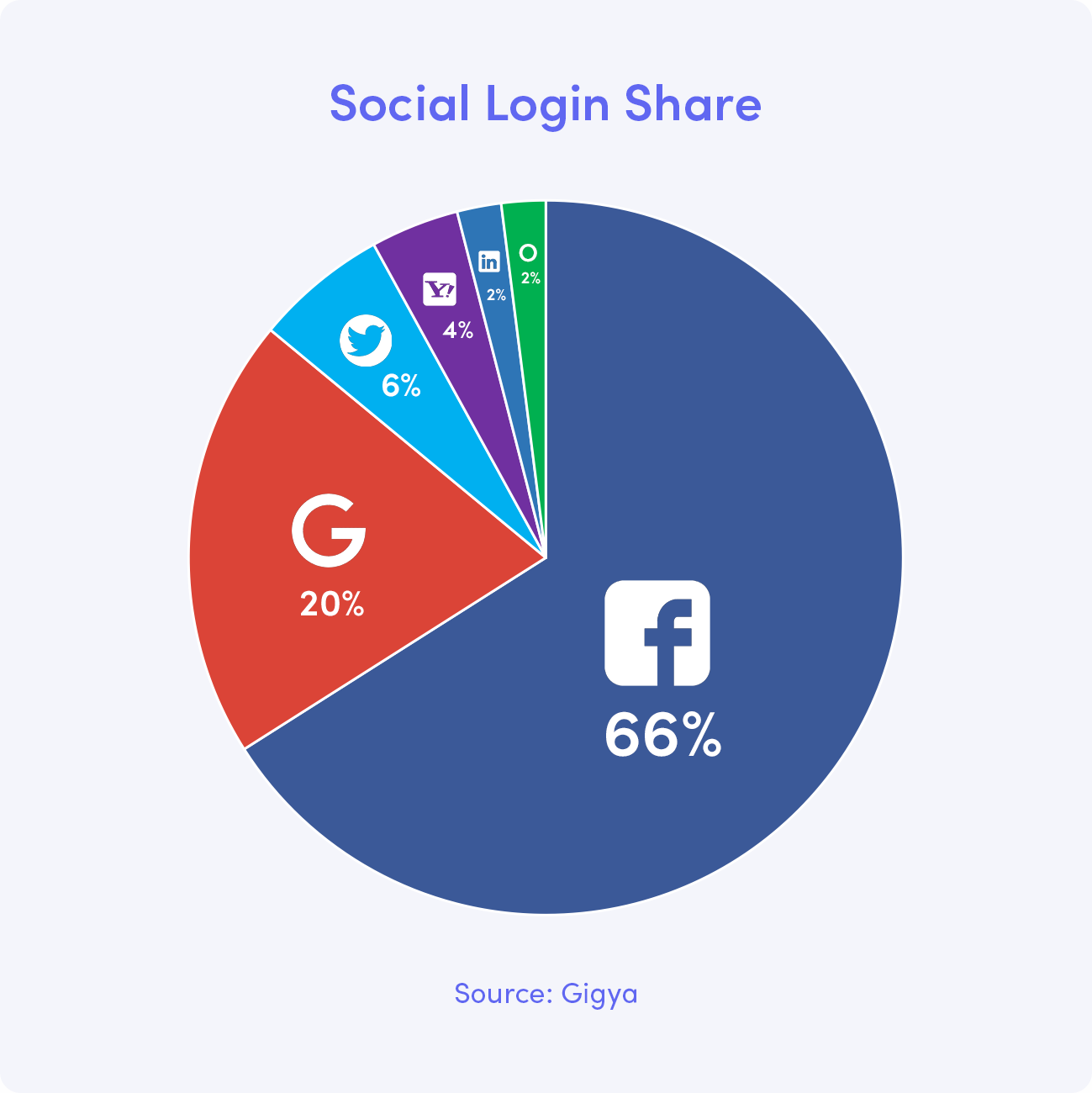

The benefits to Facebook of providing single sign-on are clear: it cements Facebook as the de facto online platform and gatekeeper to the internet, ties users even more deeply to the service, and makes it nearly impossible for them to leave the Facebook ecosystem. According to Datanyze, 352,727 unique domains use Facebook Login, giving Facebook a 46.90% share of the login market. As of 2015, according to Gigya, Facebook accounted for 66% of all social logins and 77% of mobile social logins.

The Cost of Connectivity

While at the time of its release Facebook had only 100 million users worldwide, Login helped to catapult the company forward on its monumental rise to over 2 billion users worldwide by 2017. Until 2008, Facebook had mostly served niche user bases, specifically high school and college students. At that time, concerns around digital privacy and data security barely existed, and students were happy to agree to questionable terms of service, no matter how onerous, in return for all the new benefits that social media provided.

Facebook’s growth imperative, and the logic of relinquishing privacy in the name of increased connectivity and access to innovative digital services, came only briefly under scrutiny when an internal memo written in 2016 by Facebook Vice President Andrew Bosworth was leaked to BuzzFeed in March of 2018. In the memo, Bosworth wrote that growth came before all else, and that in the name of connecting people all potentially negative second-order effects were justified.

From this perspective, it is easy to see how, in the name of growth and connection, Facebook was more than willing, and able, to rationalize the sweeping of user privacy and data security concerns under the rug in its quest for global reach. If personal privacy is infringed upon, if breathtaking levels of data collection and sharing are necessary, and even if company practices and policies lead to physical or mental harm, the ends justify the means.

It is this incessant need for growth that has vaulted Facebook to over 2.2 billion users worldwide and a staggering $457 billion market capitalization, and allowed Zuckerberg to amass a $62.4 billion fortune along the way. As Katherine Losse, Zuckerberg’s former speechwriter, explains, “Scaling and growth are everything, individuals and their experiences are secondary to what is necessary to maximize the system.” For it is often in the interest of companies, and their revenue streams, to continue to collect as much data as possible as free from privacy and security constraints as possible.

In the case of Facebook, it is their advertising revenue and developer ecosystem that are dependent on the mass unencumbered trawling of data. Of course, these incentives are far from aligned with those of the users, who also by nature lack the power to combat corporations who have the financial, legal, and lobbying resources necessary to keep these users at bay.

The Cambridge Analytica Scandal

It was this policy and culture at Facebook that set the stage for the Cambridge Analytica scandal, in which the private data of over 86 million users was leaked to the political data firm. Facebook initially threatened to sue both the Guardian and the New York Times if they ran the story, and then responded to the scandal by downplaying the leak. It was quickly revealed that Facebook had known about the data harvesting for over two years and had tried to quietly get the firm to delete the data, without ever warning users that their personal information had been siphoned off.

The Guardian had first broken news of the use of “harvested data on millions of unwitting Facebook users” by a data company embedded within Ted Cruz’s presidential campaign in December of 2015. In its report, the Guardian was clear that the data had been collected without users’ knowledge or permission. A Facebook spokesman at the time said that “misleading people or misusing their information is a direct violation of our policies and we will take swift action against companies that do.”

More than a year later, in March of 2017, the Intercept released its own investigative report expanding further on the data harvesting operation. However, Facebook failed to act until the New York Times broke further revelations in March of 2018. In response to the New York Times report, Paul Grewal, Vice President and Deputy General Counsel for Facebook, released a statement that Cambridge Analytica, Christopher Wylie, and Aleksandr Kogan would be immediately suspended from Facebook, and that Facebook was taking “whatever steps required” to see that the data was deleted.

While Facebook was all too eager to point out that it was not an outright breach of security, the Cambridge Analytica scandal speaks to a more insidious culture at Facebook, one that prevailed for years until increased scrutiny forced the company to take user privacy seriously. Operating with lax data privacy policies, Facebook was content to let user data be collected, mined, and further disseminated by third parties nearly unfettered for years. And ultimately, Facebook Login was at the heart of the Cambridge Analytica debacle as well.

Facebook’s Connect feature was exploited by Cambridge University professor Aleksandr Kogan with the development of his “thisisyourdigitallife” quiz app. Roughly 270,000 Facebook users used Facebook Login to access the quiz app, sharing their personal information with the developer in the process. Kogan gained access not only to the data of the app’s users but also to that of all of their Facebook friends, completely unbeknownst to them, a result of Facebook playing fast and loose with access to and sharing of private profile information. All told, this allowed Kogan to siphon off the personal data of 86 million Facebook users, all of which was in full compliance with Facebook’s terms of service and developer guidelines at the time.

Of course, the data was collected under false pretenses, with Kogan stating it was for the purposes of academic research. Kogan began harvesting data in 2014, spending roughly $800,000 to collect data on the 86 million users. Of those 86 million, only 270,000 had provided consent, and even they were unaware of the more nefarious purposes of the app. To make matters worse, the harvested data was also stored insecurely: a former Cambridge Analytica employee reported to the New York Times that “he had recently seen hundreds of gigabytes on Cambridge servers, and that the files were not encrypted.”

What made the revelation such a bombshell was the manner in which the data was then used, far removed from what users originally consented to. As news broke on March 17, 2018 with coordinated reports from the New York Times, Guardian, and Observer, users quickly became aware of how their data was being put to use without their permission or knowledge. Cambridge Analytica had hoped, whether they were able to actually accomplish it or not, to identify the personality traits of voters and then use those psychographic profiles to influence and manipulate the American electorate. Fallout was swift, setting the stage for Congressional theatrics and widespread outrage, and finally providing for a profound moment in the collective consciousness for issues pertaining to consumer data and digital privacy. Facebook’s stock price fell precipitously, shedding over $50 billion in value in a matter of days.

Setting up an uncomfortable conversation in the wake of Facebook’s latest breach, Zuckerberg took out full-page ads in multiple major American and European newspapers a week later, with the headlines reading “We have a responsibility to protect your information. If we can’t, we don’t deserve it.” It was a responsibility and a promise that was yet again broken in September. All the while, top executives were quick to deflect blame and displayed an obstinate inability to take responsibility, instead pointing out that the collection was completely within Facebook’s guidelines at the time.

For all of the outcry, Facebook faced little in the way of formal legal or financial ramifications. It wasn’t until October that the UK’s privacy watchdog, the Information Commissioner’s Office, fined Facebook $644,400. Information Commissioner Elizabeth Denham said in a statement, “Facebook failed to sufficiently protect the privacy of its users before, during and after the unlawful processing of this data. A company of its size and expertise should have known better and it should have done better.”

What Cambridge Analytica showcases is the extent to which data is harvested and mined on a daily basis by tens of thousands of entities in an attempt to influence and manipulate the behavior and decision-making of individuals, and the lengths a whole array of firms will go to in order to obtain that data. At the same time, the response from industry was telling, as even seemingly forward-looking Silicon Valley giants like Google and Apple often only take data security and privacy seriously as a matter of expedience, when the outcry from consumers or the media grows to such a fever pitch that they can no longer afford to sit idly by. Facebook, and the tech industry in general, has failed time and time again to regulate itself.

A Shifting Dialogue on Data Security & Privacy

As a result of the Cambridge Analytica scandal, Facebook, which had up to that point been unwilling to voluntarily implement GDPR safeguards outside of the European Union, quickly backpedaled, with Zuckerberg telling reporters on April 4, “We’re going to make all the same controls and settings available everywhere, not just in Europe.” In a subtle hedge, however, Zuckerberg conceded that these controls wouldn’t be identical to their European counterparts, leaving room for Facebook to tweak and change its policies and settings as needed.

A week later, Zuckerberg was testifying in front of Congress, spending two hearings defending Facebook and responding to questions pertaining to the company’s handling of user data. Senators were quick to point out Facebook’s breach of user trust, remarking that it would be hard to trust the company to regulate itself given continued failures to live up to its promises. The hearings provided for a potential watershed moment, as millions of citizens, and dozens of media outlets, watched as privacy rights were elevated to the national dialogue, and for the first time the unquestioned logic of relinquishing privacy in the name of increased digital innovation and connectivity, which has always been positioned as a necessary trade-off, was subjected to repeated questioning on the national stage.

Senators also zeroed in on fundamental questions of transparency and trust, focusing on investigative reports that had alerted Facebook to Cambridge Analytica as early as 2015 and questioning why the company had not informed users at the time, more than two years before the story broke. Following the Cambridge Analytica scandal, Facebook decided to leave an anti-privacy alliance that it had joined to fight California’s Consumer Privacy Act. Facebook had previously donated $200,000, along with Google, Comcast, AT&T, and Verizon, to the alliance, which was set up to fund lobbying and ads opposing the legislation.

In July, on news of stagnant user growth in North America and a slight contraction in Europe, Facebook’s market cap shed over $119 billion in a single day. Revenue forecasts were also down due to new features allowing users to opt out of specific information being collected by Facebook. If there was going to be an impetus for proactively enhancing user privacy, Cambridge Analytica provided one.

A Pattern of Failure

Facebook was playing fast and loose with user data as far back as 2011. In November of that year, in one of its first major privacy debacles, Facebook settled with the FTC over charges that “it deceived consumers by telling them they could keep their information on Facebook private, and then repeatedly allowing it to be shared and made public.” In its complaint, the FTC listed numerous examples of blatant disregard for user privacy and instances of outright deception, including telling users that third-party apps could only access specific profile information when, in fact, third-party apps could access all of the users’ data and that of their friends as well.

It was this free flow of user data to connected apps that Cambridge Analytica would exploit a full three years later. The FTC settlement required Facebook to obtain explicit consent from users before their information was shared, a requirement it was clearly still not in compliance with by the beginning of 2015. As such, in light of the Cambridge Analytica fiasco, the FTC announced that it was opening a new investigation into whether Facebook had violated the 2011 consent decree, with financial exposure potentially running into the trillions of dollars.

While the FTC settlement came in November 2011, it wasn’t until April 28, 2015 that Facebook updated its API to prevent third-party apps from accessing users’ friend data, a move that ironically came in tandem with a new company slogan, “People First.” The release of Facebook’s new Login API also provided users with more granular control of the data that was shared with developers, forcing developers to show users exactly what information and permissions they were giving the app and giving users a limited degree of control over that sharing. Facebook was more than happy to later announce that the change boosted its login conversion rate by 11 percent. While lauded, the change came years too late. And it wouldn’t be until nearly three years later, 11 days after the Cambridge Analytica story broke, that the company would announce the introduction of any additional data and privacy controls.

It is important to note that it wasn’t just developers that Facebook gave copious amounts of user data, but governments as well. In 2013, the Guardian broke Edward Snowden’s NSA revelations, detailing how the National Security Agency’s top-secret Prism program had direct access to the servers of top Silicon Valley firms, including Facebook. One of the NSA documents that Snowden leaked claimed data “collection directly from the servers” of Facebook since 2009, including chats, videos, photos, notifications, and other personal activity. Facebook denied the allegations and said they had no knowledge of the program.

Of course, collection on this scale and magnitude is only possible when data is centrally stored and not encrypted by the end user. As Snowden wrote in 2013, “Know that every border you cross, every purchase you make, every call you dial, every cell phone tower you pass, friend you keep, article you write, site you visit, subject line you type, and packet you route, is in the hands of a system whose reach is unlimited but whose safeguards are not.” Snowden was writing about the NSA, but it is centralized platforms and data repositories that make this dystopian reality possible. It applies to Facebook all the same, an entity that knows no bounds on the user data it collects and the omniscience it seeks.

An Uncertain Future

Since March, criticism of Facebook has been constant and unrestrained. In September, just prior to news breaking of the massive access token and single sign-on breach, Evan Osnos wrote a scathing profile on Facebook for the New Yorker, remarking on the stark dichotomy between the acute lack of privacy on the platform and the deep need of its founder for his own privacy. “Facebook capitalized on a resource that most people hardly knew existed: the willingness of users to subsidize the company by handing over colossal amounts of personal information, for free,” wrote Osnos. It was this resource, unfettered access to and accumulation of personal data, that fueled Facebook’s rise and could potentially be its downfall.

In the wake of September’s breach, calls for action quickly came from US and European policymakers. Senator Mark Warner said in a statement that “a full investigation should be swiftly conducted and made public so that we can understand more about what happened” and that “today’s disclosure is a reminder about the dangers posed when a small number of companies like Facebook and the credit bureau Equifax are able to accumulate so much personal data about individual Americans without adequate security measures.” Online, the #DeleteFacebook campaign regained momentum and user growth has begun to stall.

Ireland’s Data Protection Commission, Europe’s top data regulator, has requested more information from the company and could potentially pursue fines of up to $1.63 billion under the EU’s new General Data Protection Regulation. Under GDPR, which went into effect in May, companies are required to notify regulators of data breaches within 72 hours of detecting them and can be fined up to 4% of their global revenue if they are found to have failed to safeguard user data. Facebook disclosed the breach within the 72-hour window mandated by the law, but it is unclear whether the company will be able to show that it adequately safeguarded user data.
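The $1.63 billion figure follows from the 4% ceiling. As a rough check using only the numbers quoted above, the sketch below works backward to the annual revenue that figure implies. The function names are illustrative, and the calculation ignores every other provision of the regulation.

```python
GDPR_MAX_RATE = 0.04  # 4% ceiling on global revenue, as described above


def max_fine(annual_revenue):
    """Upper bound on a fine for a given annual global revenue."""
    return annual_revenue * GDPR_MAX_RATE


def implied_revenue(fine_ceiling):
    """Annual revenue implied by a quoted maximum fine."""
    return fine_ceiling / GDPR_MAX_RATE


reported_ceiling = 1.63e9  # the $1.63 billion figure quoted above
revenue = implied_revenue(reported_ceiling)
print(f"Implied annual revenue: ${revenue / 1e9:.2f} billion")
```

Under these assumptions the quoted ceiling corresponds to roughly $40.75 billion in annual global revenue.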

EU regulators have yet to impose fines under GDPR, potentially making Facebook the first real-world test case of the data protection framework. Commentators believe it is possible that regulators will use Facebook as a standard-setting case as they look to foster strict compliance moving forward. For Facebook to be liable, investigators will have to establish that the company was negligent in establishing and implementing its security practices.

Given that the breach also compromised third-party apps and services that use Facebook Login, there are lingering questions, and complexities, as to whether these third parties could be liable if additional information was exposed on their platforms as well. How the European Union’s new privacy regime responds to the breach will have wide-ranging consequences both for Facebook and for other companies far into the future, and will most likely shape the data landscape moving forward.

Facebook finds itself at the heart of the global debate on data privacy and security after years of sailing unperturbed by user concerns. The winds have changed, as tech giants have gone from lauded and nimble innovators akin to the pioneers of the early twentieth century in the collective consciousness, to being scrutinized as potentially harmful and unscrupulous entities that rival the size of nation-states and whose behavior holds the potential for impacting the lives and well-being of billions around the globe. As these startups have morphed into economic giants with user bases that rival the sovereign states that seek to regulate them, whose fortunes have been built on the oil wells of data, their futures rest on the concerns of consumers and policymakers and their response to them.